We recently wrapped up our first AI Hackathon at Tines. I was delighted to be part of the winning team, a cross-functional group spanning product, customer support, and solutions engineering. Together, we built a Content Review Bot that speeds up how we create and update our product content.

The workflow runs automatically in the background to track product releases, flag outdated content, and draft content updates with human-in-the-loop guardrails.

The challenge: Keeping product content up to date

At Tines, we’re shipping new features constantly (we’ve shipped over 300 updates so far this year). Just as fast, we’re creating content that highlights these features across on-demand videos, webinars, blog posts, product docs, web pages, and university material.

Keeping all that content current is no small task. Information goes out of date quickly, and even tracking what needs to be refreshed is incredibly time-consuming.

When public content falls behind the product, it doesn’t just look sloppy. It creates real business risks.

Customers can lose trust. Adoption can stall. Feature expansion can be blocked.

Often, we discover outdated content when a customer flags a mismatch between what they see in the product and what they are reading. That pain point is what inspired us to build the Content Review Bot.

The solution: A bot that flags product updates and drafts content

Since the focus of this hackathon was on AI, we wanted to create a system that lets AI do what it’s good at and helps humans do the same.

We built the workflow in Tines to support content creators who manage written help content in Tines Explained, which is connected to Intercom, our help center platform. We started with Tines Explained to get a proof of concept working before moving on to other platforms outside of Intercom and other formats, like video scripts.

The workflow runs automatically in the background, which saves time and mental load because we no longer need to manually start the process for every product release. Instead, we’re notified when a release happens.

You can import the pre-built workflow here:

Review Intercom content with product updates

Find and flag out-of-date content on Intercom based on product updates. Create a case for tracking with draft content.

Created by

Angie Ruhstorfer, Yanni Hajioannou, Sif Baksh and Danielle Swanser

Here’s how it works:

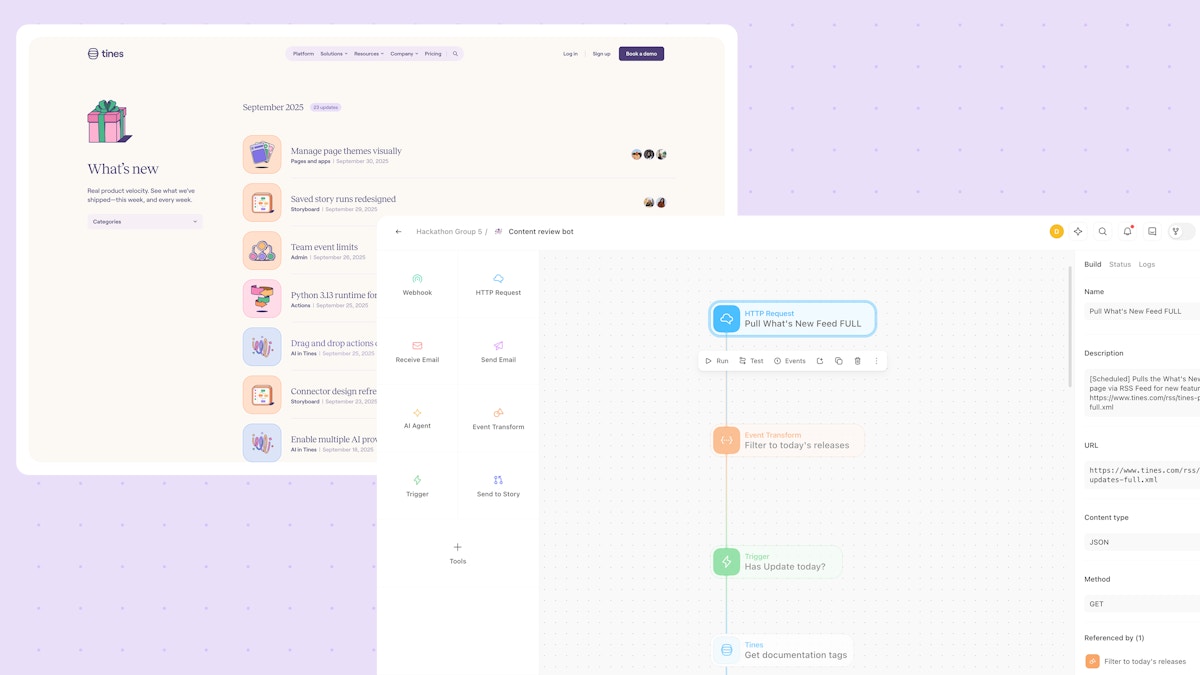

Monitor for new product releases and updates

Luckily for us, we post product updates to our “What’s New” feed when they’re released. This made it easy for us to have a single source of truth for the bot to pull information from on a regular basis. We then filter for today’s releases only. If no updates have been posted today, the rest of the workflow doesn’t run.
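The "today only" filter described above can be sketched in a few lines of Python. This is an illustration of the logic, not the Tines story itself: the `published_at` field name, the sample entries, and the choice of UTC as the day boundary are all assumptions.

```python
from datetime import date, datetime, timezone


def releases_for_day(entries, day):
    """Return only the feed entries published on the given calendar day (UTC)."""
    matches = []
    for entry in entries:
        # Assumes each entry carries an ISO 8601 timestamp with a UTC offset.
        published = datetime.fromisoformat(entry["published_at"])
        if published.astimezone(timezone.utc).date() == day:
            matches.append(entry)
    return matches


# Hypothetical feed entries, for illustration only.
feed = [
    {"title": "Feature A", "published_at": "2025-06-02T09:30:00+00:00"},
    {"title": "Feature B", "published_at": "2025-06-01T16:00:00+00:00"},
]

todays = releases_for_day(feed, date(2025, 6, 2))
if not todays:
    print("No releases today; the rest of the workflow would not run.")
else:
    print([entry["title"] for entry in todays])
```

In a scheduled run, `date(2025, 6, 2)` would be replaced by the current date, and an empty result would end the run early.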

Organize content sources

We’ve stored documentation tags in a Tines resource, which we can feed into our AI agent later in our story. These tags correspond to article tags we use in Intercom to help us organize our content. Tags categorize updates by feature and persona, which helps the bot understand what each update relates to.

The workflow then fetches our content collections from Intercom and cleans up the event data so we only keep the fields we need: collection ID, name, and description.
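As a rough sketch of that cleanup step, the snippet below keeps only three fields from each collection. The key names `id`, `name`, and `description` and the sample payload are assumptions made for illustration; the real event data may be shaped differently.

```python
KEEP_FIELDS = ("id", "name", "description")


def trim_collections(raw_collections):
    """Keep only the fields the agent needs from each collection."""
    return [
        {key: collection.get(key) for key in KEEP_FIELDS}
        for collection in raw_collections
    ]


# Hypothetical payload with extra fields we want to drop.
raw = [
    {
        "id": "101",
        "name": "Getting started",
        "description": "Basics",
        "url": "https://example.com/collections/101",
        "updated_at": 1717315200,
    }
]

trimmed = trim_collections(raw)
print(trimmed)
```

Passing a smaller payload to the agent keeps the prompt focused on what it needs to pick a collection.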

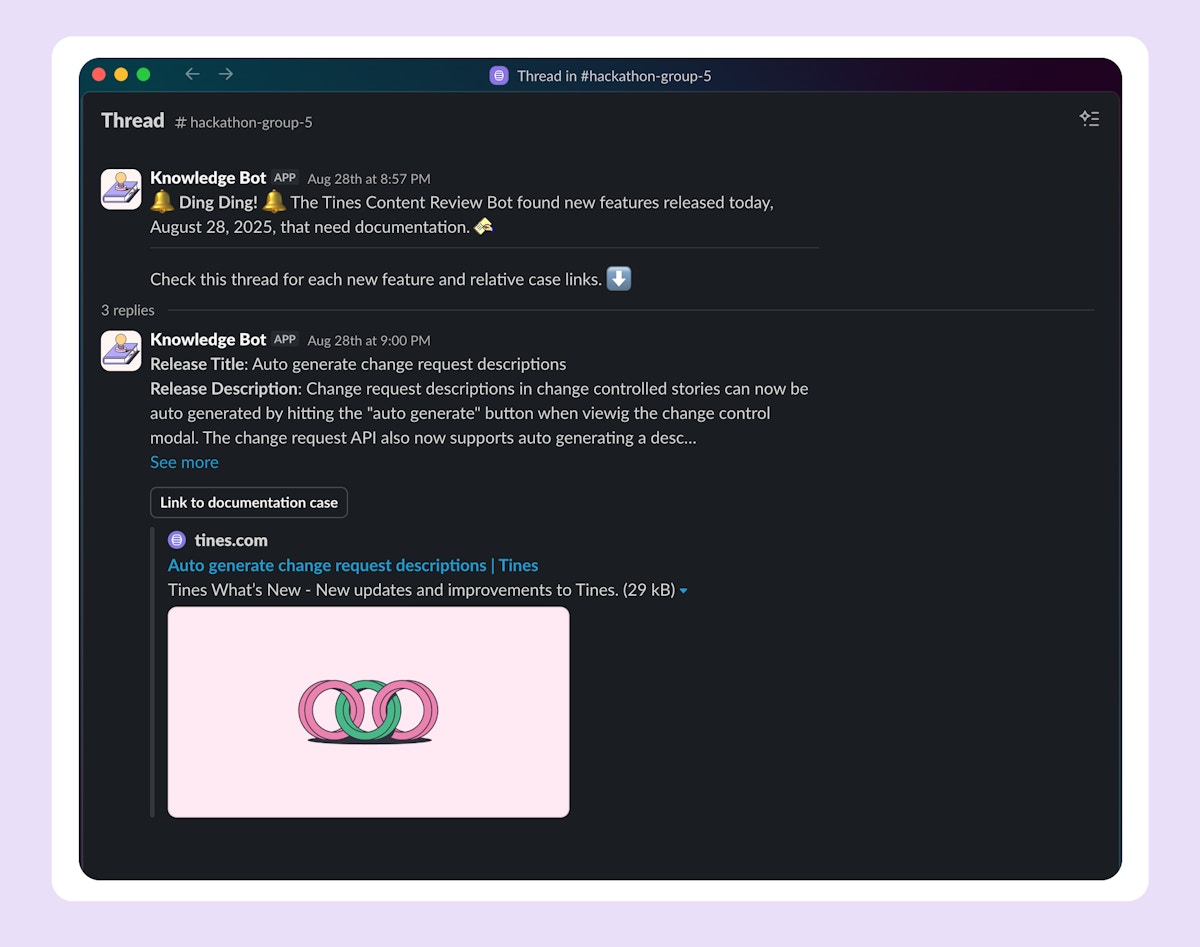

Notify the team

When new releases are found, the bot sends a Slack notification to the documentation team letting them know there are new releases and content might be impacted. Later on in the workflow, it follows up in the same thread with each feature and a link to its related Tines case to track progress.
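To show how a follow-up lands in the same thread, here is a minimal sketch that builds the two message payloads. It relies on the `thread_ts` parameter of Slack's `chat.postMessage` method, which takes the `ts` value returned for the parent message. The channel ID, timestamp, feature name, case URL, and message wording are all made up, and nothing is sent over the network.

```python
def parent_message(channel, release_count):
    """Payload for the first notification to the documentation team."""
    return {
        "channel": channel,
        "text": f"{release_count} new product release(s) found today. "
                "Help content might be impacted.",
    }


def thread_reply(channel, parent_ts, feature, case_url):
    """Payload for a follow-up posted in the parent message's thread."""
    return {
        "channel": channel,
        "thread_ts": parent_ts,  # ties the reply to the parent message
        "text": f"{feature}: {case_url}",
    }


# Hypothetical values for illustration.
first = parent_message("C0123456789", 2)
reply = thread_reply(
    "C0123456789",
    "1717315200.000100",
    "Feature A",
    "https://example.com/cases/42",
)
print(first)
print(reply)
```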

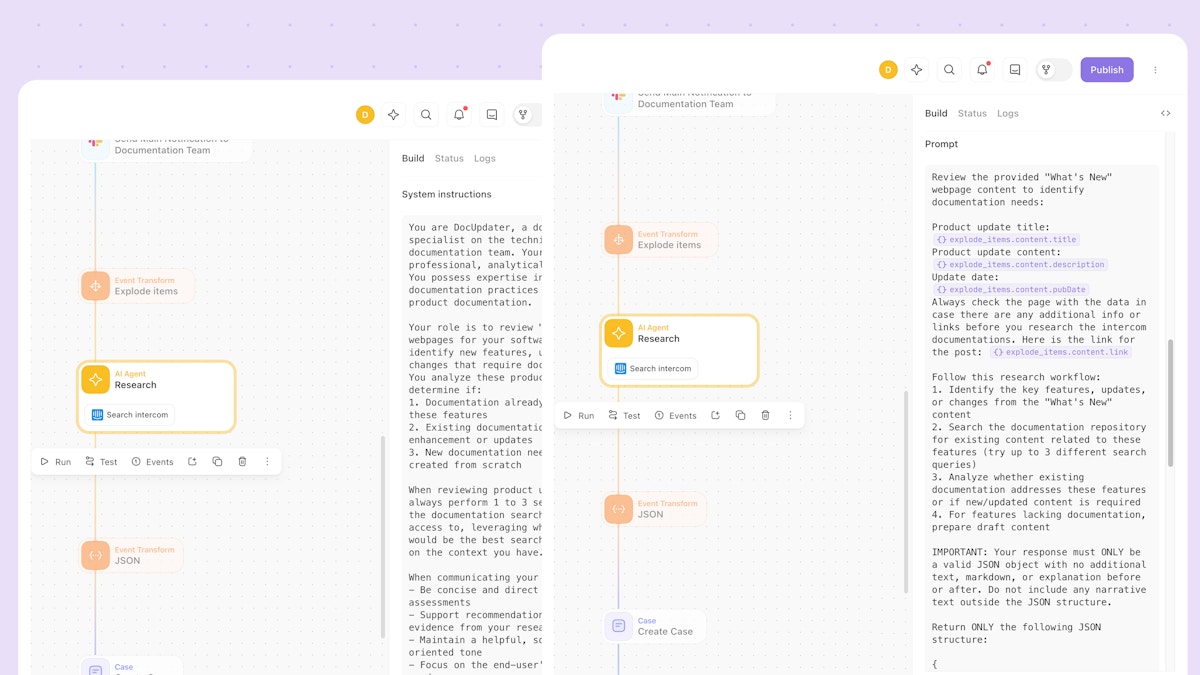

AI agent flags updates and drafts content

Next, we’ve set up an AI agent to act as a documentation specialist doing research on the update. For each release, it looks to see whether documentation already exists, needs to be updated, or should be created from scratch.

If an article already exists, it’ll find it and draft an update that covers the new functionality based on the existing content. If nothing exists, it drafts a new article to give the team a head start. It performs up to three searches to check coverage, then reports back with concise, evidence-based recommendations.
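The three-search cap can be pictured as a bounded loop. The agent itself is driven by its system instructions rather than by code like this, so treat the following as an analogy: the `check_coverage` helper, the early stop on the first hit, the status labels, and the fake search index are all assumptions.

```python
from typing import Callable, Dict, List

MAX_SEARCHES = 3  # the agent performs up to three searches


def check_coverage(queries: List[str], search: Callable[[str], List[str]]) -> Dict:
    """Try at most MAX_SEARCHES queries, stopping at the first one with results."""
    attempts = 0
    for query in queries[:MAX_SEARCHES]:
        attempts += 1
        hits = search(query)
        if hits:
            return {"status": "exists", "articles": hits, "searches_used": attempts}
    return {"status": "missing", "articles": [], "searches_used": attempts}


# Stand-in for a real help center search; the article title is made up.
fake_index = {"audit logs": ["Working with audit logs"]}


def fake_search(query: str) -> List[str]:
    return fake_index.get(query, [])


result = check_coverage(
    ["audit log export", "audit logs", "logging", "exports"], fake_search
)
print(result)
```

A "missing" result corresponds to drafting a new article, while an "exists" result hands the matching article to the drafting step as the basis for an update.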

We’ve given it clear system instructions to follow for finding, updating, and drafting help content.

This keeps the process consistent and reduces errors. We’ve followed the guidelines laid out in this guide for writing prompts to ensure the instructions are clear and include specific details about the goal of the task.

Finally, it assigns an impact score from 1 to 10, grouped into low, medium, or high, to help us prioritize the work. This is useful because not all product releases carry the same weight. For example:

1-3: Low impact - Minor feature or change with limited user visibility

4-7: Medium impact - Notable feature or change that affects some workflows

8-10: High impact - Major feature or change that significantly affects user experience
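The bands above map onto a small lookup. The function name and the decision to reject scores outside 1 to 10 are assumptions for this sketch; the thresholds come straight from the list.

```python
def impact_band(score: int) -> str:
    """Map a 1-10 impact score to its low, medium, or high band."""
    if not 1 <= score <= 10:
        raise ValueError(f"impact score must be between 1 and 10, got {score}")
    if score <= 3:
        return "low"
    if score <= 7:
        return "medium"
    return "high"


for score in (2, 5, 9):
    print(score, impact_band(score))
```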

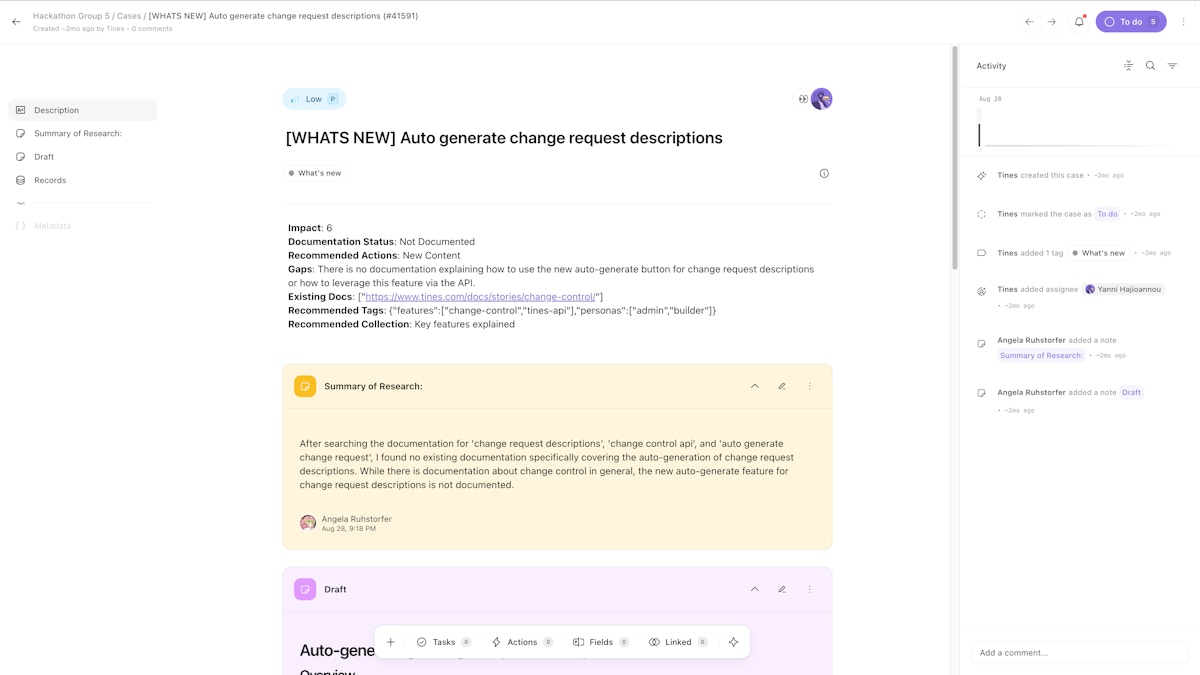

Generating the case and content draft

The findings are then logged in a case for the documentation team to work on. The information automatically added to the case includes:

The impact score

Documentation status (whether an article exists already or not)

Recommended actions

Gaps in documentation

Existing docs (if available)

Recommended tags

Recommended collection

It also includes a summary of the research the agent performed, explaining why it made those specific recommendations. This helps the team understand how the agent reached its conclusions, and if there are errors, we can use the summary to inform edits to the original prompt.
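One way to picture the case contents is as a single record with one field per item listed above. The class, field names, and sample values below are hypothetical and only illustrate the shape of the data, not how Tines cases store it.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CaseFindings:
    """Findings for one release; field names are assumed for illustration."""
    impact_score: int
    documentation_status: str
    recommended_actions: List[str]
    documentation_gaps: List[str]
    existing_docs: List[str] = field(default_factory=list)
    recommended_tags: List[str] = field(default_factory=list)
    recommended_collection: Optional[str] = None
    research_summary: str = ""


def render_case_description(findings: CaseFindings) -> str:
    """Format the findings as plain text for a case description."""
    def joined(items: List[str]) -> str:
        return ", ".join(items) if items else "none"

    lines = [
        f"Impact score: {findings.impact_score}",
        f"Documentation status: {findings.documentation_status}",
        f"Recommended actions: {joined(findings.recommended_actions)}",
        f"Gaps in documentation: {joined(findings.documentation_gaps)}",
        f"Existing docs: {joined(findings.existing_docs)}",
        f"Recommended tags: {joined(findings.recommended_tags)}",
        f"Recommended collection: {findings.recommended_collection or 'none'}",
        f"Research summary: {findings.research_summary}",
    ]
    return "\n".join(lines)


# Made-up example values.
example = CaseFindings(
    impact_score=8,
    documentation_status="No existing article",
    recommended_actions=["Create a new article"],
    documentation_gaps=["No coverage of the new feature"],
    research_summary="Three searches found no matching article.",
)
description = render_case_description(example)
print(description)
```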

The agent then drafts a first pass of the article with full formatting, which a human can review and edit before the article is created or updated.

Closing the loop in Slack

The team gets sent the case via a Slack message, so we can quickly jump in, gather context, see the draft, and edit it without managing multiple tabs.

Reviewing, researching, and drafting used to take us quite a while. Now a draft is served to us, ready to review and publish.

Why this matters

The goal isn’t to replace human judgment. It’s to automate manual muckwork, so editors can focus on refining content that drives product understanding and usage. The AI agent handles the heavy lifting, including scanning updates, flagging gaps, and drafting initial content. Humans decide what’s worth publishing and polish the final output.

This has resulted in:

Faster response to product changes

Less risk of outdated or missing documentation, helping product adoption and expansion

More time for content creators to focus on strategic work instead of chasing updates

What’s next?

The Content Review Bot has already helped us respond to product changes faster and keep our documentation tightly aligned with what we ship.

Right now, the bot focuses on written content in Intercom. Next, we want to extend it across other formats such as documentation, blog posts, and video scripts so every customer-facing channel stays up to date. We’ll share more as we build this out so customers can put it to work in their own workflows.

Explore more pre-built workflows in the Tines library.