At Tines, we rely heavily on AWS Elastic Container Service (ECS) to power our workflow automation platform. For a couple of years, we used Fargate as our default compute layer – offering simplicity and removing the need to manage underlying hosts. However, as we scaled, we started hitting the edges of what Fargate could reliably offer. This is the story of why we migrated our backend services to an EC2-backed ECS Capacity Provider and what we learned along the way.

Why we needed change

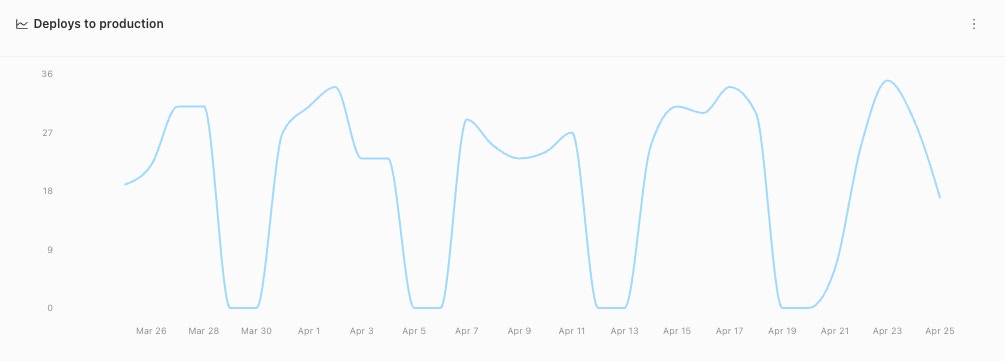

Fargate's promise is simplicity, and we love simplicity at Tines. However, that abstraction started causing friction, especially at our scale (deploying 20-40 times a day to about 90 ECS clusters, churning hundreds of tasks and thousands of containers each time). We really like deploying quickly and often, so slowing down was not an option.

1. Deployment roulette & stale code: We increasingly faced deployment failures due to "Fargate capacity unavailable" errors, particularly during peak times. We also learned that Fargate Spot wouldn't fall back to On-Demand capacity or retry if Spot capacity was interrupted during a deployment. Instead, ECS marked the deployment as failed and rolled back, sometimes leaving services in a mixed state with some tasks running stale code. This instability wasn't just annoying; it impacted our deployment velocity and introduced operational risk. Even avoiding Spot entirely only halved these issues, and according to AWS support, adding more availability zones would not have completely solved the underlying capacity acquisition problems. Perhaps some of the features on the Fargate roadmap will address these issues.

2. Performance ceiling: While Fargate offers easy scaling, we lacked control over the underlying hardware. We wanted access to the latest generation CPUs and the ability to fine-tune instance capabilities beyond the simple vCPU and Memory knobs Fargate provides, potentially improving performance for some of our compute-heavy workloads. Early benchmarks showed up to 45% faster execution for CPU-bound operations such as Event Transformation Action Runs.

3. Isolation and flexibility: More importantly, EC2 offered the flexibility to optimize instance types for our specific workloads (memory-hungry Ruby on Rails containers), more isolation (fewer “noisy neighbor” issues, or at least fewer neighbors that we don’t control), finer control over networking and the ability to handle interruptions more gracefully. For instance, we later learned that the hard limit of 2 minutes on Fargate task draining (stopTimeout) would have been a problem for some longer jobs we wanted to roll out.

4. Cost: While cost wasn't our primary driver (the biggest line item in our AWS bill is databases anyway), our preliminary analysis suggested potential savings of about 15% on compute through the use of Spot instances and EC2 Savings Plans.

ECS on EC2

After considering alternatives (like staying with Fargate but adding a third availability zone and staggering deployments, or moving to Kubernetes), we landed on replacing Fargate with an EC2 Auto Scaling Group (ASG) managed via an ECS Capacity Provider. This felt like the right balance: it leveraged our existing ECS expertise and tooling (versus, say, moving to Kubernetes) while granting the control we needed.

The biggest anticipated win was deterministic deployments: the certainty that a successfully completed deployment actually meant the new code was running everywhere, eliminating the risk of Fargate rollbacks.

All of this at the expense of more management and operational overhead.

Interestingly, we originally ran Tines on EC2, and decided to move to Fargate in 2022. Then back to EC2 in 2024? Well, we didn’t exactly move "back to EC2", since we used to run containers directly on EC2. Rather, this move was sideways: essentially swapping capacity providers on ECS. All of our services, tasks and containers would remain largely the same. All of our deployment strategies and orchestration would be kept as-is as well. We also decided early on to stick with the awsvpc network mode for our tasks, mirroring our Fargate setup. This maintains network isolation per task (crucial for some internal services) and keeps security group management consistent. The only new thing was to start managing the underlying infrastructure where our containers live.

Building the EC2 fleet

Here are some key decisions we made when building our EC2 Capacity Provider:

Instance strategy

Cost modeling pointed towards instance types like t3a.large or m7i-flex.large (with two tasks per instance) for good price-performance. However, relying on a single instance type felt risky for availability, especially with Spot, so we opted for a Mixed Instances Policy in our ASG. After running the t and -flex families for a while, we hit CPU and networking bottlenecks and decided to move off them.

Our primary targets were r7i.large and similar (r6i, r6a, r5, etc., as fallback).

We configured an Instances Distribution aiming for a significant portion (e.g., 30%+) of our fleet to run on Spot Instances using the capacity-optimized-prioritized allocation strategy, with a small base capacity of On-Demand for stability. This gives us cost savings while mitigating Spot interruption risk by having diverse instance types available.

We enabled Capacity Rebalance to proactively replace Spot instances that AWS signals are at high risk of interruption.
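To make the shape of such a policy concrete, here is a minimal sketch that assembles the relevant CloudFormation properties of an `AWS::AutoScaling::AutoScalingGroup` as a plain object. The helper name `buildSpotAwareAsgConfig`, the launch template ID, the `.large` sizes of the fallback families and the On-Demand base of 2 are illustrative assumptions, not our actual configuration.

```typescript
// Subset of AWS::AutoScaling::AutoScalingGroup properties relevant to Spot usage.
interface SpotAwareAsgConfig {
  CapacityRebalance: boolean;
  MixedInstancesPolicy: {
    LaunchTemplate: {
      LaunchTemplateSpecification: { LaunchTemplateId: string; Version: string };
      Overrides: { InstanceType: string }[];
    };
    InstancesDistribution: {
      OnDemandBaseCapacity: number;
      OnDemandPercentageAboveBaseCapacity: number;
      SpotAllocationStrategy: string;
    };
  };
}

// Hypothetical helper. `instanceTypes` is ordered from most to least preferred;
// the capacity-optimized-prioritized strategy treats that order as a priority hint.
function buildSpotAwareAsgConfig(
  launchTemplateId: string,
  launchTemplateVersion: string,
  instanceTypes: string[],
  onDemandBase: number,
  spotPercentage: number, // share of capacity above the base that should be Spot
): SpotAwareAsgConfig {
  if (instanceTypes.length === 0) {
    throw new Error("at least one instance type is required");
  }
  if (spotPercentage < 0 || spotPercentage > 100) {
    throw new Error("spotPercentage must be between 0 and 100");
  }
  return {
    CapacityRebalance: true,
    MixedInstancesPolicy: {
      LaunchTemplate: {
        LaunchTemplateSpecification: {
          LaunchTemplateId: launchTemplateId,
          Version: launchTemplateVersion,
        },
        Overrides: instanceTypes.map((InstanceType) => ({ InstanceType })),
      },
      InstancesDistribution: {
        OnDemandBaseCapacity: onDemandBase,
        OnDemandPercentageAboveBaseCapacity: 100 - spotPercentage,
        SpotAllocationStrategy: "capacity-optimized-prioritized",
      },
    },
  };
}

// Example values only: placeholder launch template, assumed sizes and base capacity.
const exampleAsgConfig = buildSpotAwareAsgConfig(
  "lt-0123456789abcdef0",
  "1",
  ["r7i.large", "r6i.large", "r6a.large", "r5.large"],
  2,
  30,
);
console.log(JSON.stringify(exampleAsgConfig, null, 2));
```

Listing several interchangeable instance types matters more than the exact percentages: the more pools the ASG can draw from, the less a single Spot interruption wave hurts.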

EC2 is less efficient than Fargate at capacity allocation, and this effectively increases the cost. The memory-to-CPU ratio of an EC2 instance is fixed and may not exactly match our needs, we typically need some extra room in the ASG, and we do not always pack tasks onto instances perfectly.
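The packing overhead can be estimated with some simple arithmetic. The sketch below is illustrative only: the task size (1 vCPU, 6 GiB) and the task count are made-up numbers, while the instance shape is that of an r7i.large (2 vCPU, 16 GiB). It ignores memory reserved for the OS and the ECS agent, which would make the picture slightly worse.

```typescript
interface ResourceSize {
  vcpu: number;
  memoryGiB: number;
}

// Number of identical tasks that fit on one instance, limited by the scarcer resource.
function tasksPerInstance(instance: ResourceSize, task: ResourceSize): number {
  const byCpu = Math.floor(instance.vcpu / task.vcpu);
  const byMemory = Math.floor(instance.memoryGiB / task.memoryGiB);
  return Math.min(byCpu, byMemory);
}

// Instances required to place `taskCount` tasks, rounding up to whole instances.
function instancesNeeded(taskCount: number, perInstance: number): number {
  if (perInstance < 1) {
    throw new Error("task does not fit on this instance type");
  }
  return Math.ceil(taskCount / perInstance);
}

// Fraction of the fleet's memory that is actually allocated to tasks.
function memoryUtilization(
  instance: ResourceSize,
  task: ResourceSize,
  taskCount: number,
): number {
  const fleetSize = instancesNeeded(taskCount, tasksPerInstance(instance, task));
  if (fleetSize === 0) {
    return 0;
  }
  return (taskCount * task.memoryGiB) / (fleetSize * instance.memoryGiB);
}

const r7iLarge: ResourceSize = { vcpu: 2, memoryGiB: 16 };
const exampleTask: ResourceSize = { vcpu: 1, memoryGiB: 6 }; // hypothetical task size

// 7 tasks at 2 per instance need 4 instances; 42 GiB of 64 GiB is in use.
console.log(tasksPerInstance(r7iLarge, exampleTask));
console.log(memoryUtilization(r7iLarge, exampleTask, 7));
```

With Fargate we would pay only for the 42 GiB the tasks request; on EC2 we pay for all 64 GiB, before adding any headroom for scaling.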

Hardening the hosts and UserData:

Amazon Linux: We use the latest ECS-optimized Amazon Linux and make minimal modifications to it.

Security Hardening: We blocked container access to the EC2 Instance Metadata Service (IMDS) to prevent potential security vulnerabilities.

Centralized Logging: We set up the CloudWatch Agent to collect important system logs and metrics while being mindful of noise and unnecessary data.

Monitoring: We enabled GuardDuty Runtime Monitoring for threat detection on all instances.

The management trade-off:

Moving to EC2 undeniably means taking on more operational responsibility. We are now directly responsible for OS patching (handled via regular instance rotation), monitoring host health (metrics/alarms for host health, disk usage, etc.), ASG autoscaling and managing the ASG configuration. This was a conscious trade-off for the benefits we sought.

However, during and after the migration, we encountered unanticipated operational hurdles. We needed considerable trial-and-error to fine-tune ECS Agent configurations, discovering that several crucial features weren't enabled by default:

Graceful Spot termination (ECS_ENABLE_SPOT_INSTANCE_DRAINING=true), so that Spot instances handle interruptions smoothly.

Efficient Docker image pulling (ECS_IMAGE_PULL_BEHAVIOR=prefer-cached), to avoid unnecessary networking and CPU usage during deployments. This was causing a lot of issues and it took us months to discover the root cause.

ENI trunking: To our surprise, the number of ENIs one can attach to EC2 instances was not enough for us. For example, even though we would like to run four tasks on an r7i.xlarge instance, only 3 ENIs are available on it by default. To add support for more, ENI trunking needs to be enabled both on the ECS agent and the Cluster. Unfortunately this is easier said than done, and the mechanics to do it at scale involve an additional CloudFormation Custom Resource, IAM permissions and a dedicated Lambda.
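As a rough illustration of the agent side of these settings, the sketch below renders an EC2 UserData script that appends them to /etc/ecs/ecs.config. The helper names and the cluster name are hypothetical. ECS_ENABLE_HIGH_DENSITY_ENI and ECS_AWSVPC_BLOCK_IMDS are standard ECS agent variables; using the latter to implement the IMDS blocking mentioned earlier is an assumption about one way to do it. The sketch covers only the agent; the remaining ENI trunking setup is out of scope here.

```typescript
interface EcsAgentOptions {
  clusterName: string;
  // Assumption: block awsvpc tasks from reaching the instance metadata service.
  blockTaskImds?: boolean;
}

// Lines to append to /etc/ecs/ecs.config on each container instance.
function renderEcsConfig(options: EcsAgentOptions): string[] {
  const lines = [
    `ECS_CLUSTER=${options.clusterName}`,
    // Drain tasks when a Spot interruption notice arrives.
    "ECS_ENABLE_SPOT_INSTANCE_DRAINING=true",
    // Reuse images already present on the host instead of pulling every time.
    "ECS_IMAGE_PULL_BEHAVIOR=prefer-cached",
    // Agent-side switch for ENI trunking.
    "ECS_ENABLE_HIGH_DENSITY_ENI=true",
  ];
  if (options.blockTaskImds) {
    lines.push("ECS_AWSVPC_BLOCK_IMDS=true");
  }
  return lines;
}

// Wraps the config lines in a shell script suitable for EC2 UserData.
// The quoted heredoc delimiter prevents the shell from expanding anything inside.
function renderUserData(options: EcsAgentOptions): string {
  return [
    "#!/bin/bash",
    "cat <<'EOF' >> /etc/ecs/ecs.config",
    ...renderEcsConfig(options),
    "EOF",
  ].join("\n");
}

// "example-cluster" is a placeholder name.
console.log(renderUserData({ clusterName: "example-cluster", blockTaskImds: true }));
```

Keeping these flags in one generated script makes it harder for a new cluster to silently miss one of them.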

    import { Construct } from "constructs";
    import * as autoscaling from "aws-cdk-lib/aws-autoscaling";
    import * as ec2 from "aws-cdk-lib/aws-ec2";
    import * as ecs from "aws-cdk-lib/aws-ecs";

    export default class Ec2CapacityProvider extends Construct {
      constructor(scope: Construct, props: Props) {
        // ...
        if (props.enableEC2) {
          // EC2 fleet that hosts the ECS tasks.
          const autoScalingGroup = new autoscaling.AutoScalingGroup(
            this,
            "AutoScalingGroup",
            {
              vpc: props.vpc,
              machineImage: ecs.EcsOptimizedImage.amazonLinux2023(),
              desiredCapacity: props.desiredCapacity,
              instanceType: new ec2.InstanceType("r7i.large"),
            }
          );

          // Lets ECS place tasks on the ASG and scale it as needed.
          const capacityProvider = new ecs.AsgCapacityProvider(
            this,
            "AsgCapacityProvider",
            {
              autoScalingGroup,
            }
          );

          // Attach the capacity provider to the existing cluster.
          props.ecsCluster.addAsgCapacityProvider(capacityProvider);
        }
        // ...
      }
    }

Time to roll this out

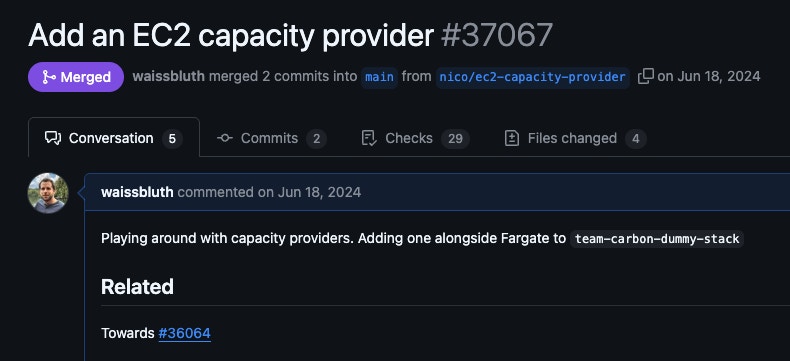

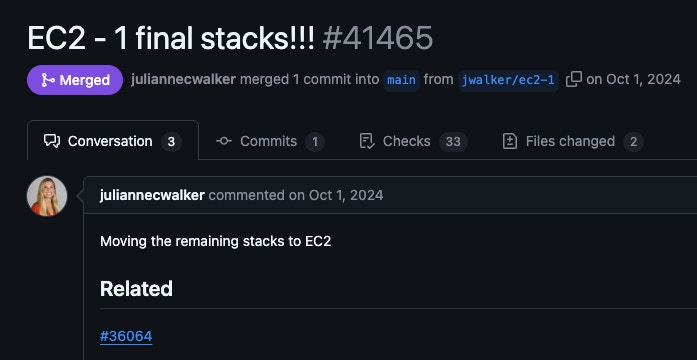

We couldn't afford a "YOLO" migration. We needed to deploy this slowly and carefully, making sure we could roll back (or even cancel the project) at any step of the way. We were already heavily relying on AWS CDK to manage our infrastructure, and this simplified the development and rollout of this project.

We followed a multi-stage process for each environment:

Provision: Deploy the new EC2 Capacity Provider and ASG, starting at zero capacity.

Ramp up: Spin up tasks on the new services that rely on EC2, causing the ASG to scale up in response.

Shift traffic: Register the new services with our Load Balancers (without deregistering the old ones), to serve traffic and handle workloads simultaneously from both sets of services.

Decommission: Once all traffic was served by EC2 and stability was confirmed, scale the Fargate services down to zero and eventually remove them.

This allowed for zero-downtime, reversible transitions that turned out surprisingly well.
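The four stages can be thought of as an ordered list of target states, where rolling back simply means returning to the previous state. The sketch below is a simplified model with hypothetical names, not our actual tooling; it only captures which set of services runs tasks and which is registered with the load balancer at each stage.

```typescript
type Stage = "provision" | "rampUp" | "shiftTraffic" | "decommission";

const STAGES: readonly Stage[] = ["provision", "rampUp", "shiftTraffic", "decommission"];

interface TargetState {
  ec2Tasks: number;
  fargateTasks: number;
  ec2BehindLoadBalancer: boolean;
  fargateBehindLoadBalancer: boolean;
}

// Target state for a service that normally runs `taskCount` tasks.
function targetState(stage: Stage, taskCount: number): TargetState {
  const states: Record<Stage, TargetState> = {
    // New capacity provider exists, but nothing runs on it yet.
    provision: { ec2Tasks: 0, fargateTasks: taskCount, ec2BehindLoadBalancer: false, fargateBehindLoadBalancer: true },
    // EC2 tasks start (the ASG scales up), Fargate still serves all traffic.
    rampUp: { ec2Tasks: taskCount, fargateTasks: taskCount, ec2BehindLoadBalancer: false, fargateBehindLoadBalancer: true },
    // Both sets of services are registered and serve traffic side by side.
    shiftTraffic: { ec2Tasks: taskCount, fargateTasks: taskCount, ec2BehindLoadBalancer: true, fargateBehindLoadBalancer: true },
    // Fargate is scaled to zero once EC2 has proven stable.
    decommission: { ec2Tasks: taskCount, fargateTasks: 0, ec2BehindLoadBalancer: true, fargateBehindLoadBalancer: false },
  };
  return states[stage];
}

// Next stage in the rollout, or undefined when the migration is complete.
function nextStage(stage: Stage): Stage | undefined {
  const index = STAGES.indexOf(stage);
  return index < STAGES.length - 1 ? STAGES[index + 1] : undefined;
}

// Stage to return to when rolling back, or undefined at the very first stage.
function previousStage(stage: Stage): Stage | undefined {
  const index = STAGES.indexOf(stage);
  return index > 0 ? STAGES[index - 1] : undefined;
}

console.log(targetState("shiftTraffic", 4));
```

Because at least one set of services is behind the load balancer in every state, moving one stage forward or backward never leaves traffic unserved.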

(Note: To preserve original cluster names post-migration, our actual process involved temporarily creating parallel clusters, switching over, deleting originals, and then recreating them with the original names. So in reality there were eight steps.)

Results are in. Did we meet our goals?

Deployment stability: Kind of. The primary goal of achieving deterministic deployments was partially met. While we eliminated the capacity-related deployment failures we had on Fargate, we did start seeing some new, unexpected kinds of failed deployments (e.g., task startup timeouts related to CPU and networking bottlenecks), which we have largely mitigated.

Performance: We observed noticeable performance improvements. While exact figures vary by workload, key metrics like P95 request latency and job throughput showed positive gains: up to 10% decrease in web latency and about 30% lower job processing times.

Cost: We saw moderate cost savings, around 5%. The combination of right-sized instances within the Mixed Instances Policy and our Spot strategy (aiming for >30% Spot utilization) brought our compute costs down, but we underestimated the bin-packing inefficiency tax.

What did we learn?

Our journey taught us valuable lessons. We learned firsthand that the management overhead associated with running EC2 at scale is real, requiring dedicated effort for monitoring and scaling.

However, the flexibility gained by controlling the underlying instances proved invaluable, allowing us to optimize performance and tailor the environment to our needs.

This migration also highlighted some of the quirks inherent in ECS, prompting internal discussions about future infrastructure directions, perhaps even switching to Kubernetes – though that's a topic for another time. If we did, we would probably use the same EC2 capacity provider there!

Overall, the move was a success. We half-joke about moving back to Fargate any time a new EC2 issue pops up.

While it introduced manageable operational complexities, the significant gains in reliability, flexibility and performance outweigh the challenges (at least for now).

As usual, there are no one-size-fits-all solutions in the world of Software Engineering. In fact, we still run some services using Fargate, and we recommend that our self-hosted customers do so as well.