For most IT operations teams, capacity management is a balancing act. Too much capacity and costs spiral; too little and users feel the impact before you do. On paper, scaling should be simple. In reality, it’s anything but. Most teams still scale manually – waiting for alerts, logging into consoles, adjusting resources, and hoping they’re not overdoing it.

It’s a pattern that feels safe because it’s familiar, but it’s quietly expensive.

Manual capacity management eats time, drains focus, and creates invisible waste that compounds week over week.

The real cost of manual scaling

Manual processes were fine when infrastructure was static. But hybrid and multi-cloud environments don’t stand still. Workloads scale up and down dynamically, traffic spikes unpredictably, and new applications appear overnight.

The challenge isn’t a lack of tools. It’s the lack of connection between them.

Each system provides insight in isolation: monitoring dashboards, alerting tools, capacity trackers. Practitioners can see the problem forming, but the response still depends on a person logging in, approving a change, or running a script. That delay shows up everywhere: in latency, in unplanned overtime, and in ballooning cloud costs.

Even well-tuned monitoring setups can’t overcome the lag created by fragmented workflows. When metrics live in one tool, approvals in another, and provisioning in a third, time slips away in context-switching. Multiply that across dozens of services, and you’ve got a system that burns hours to save seconds.

Waste, latency, and the illusion of safety

Overprovisioning feels like insurance: add a little extra capacity “just in case.” But those “just in case” instances quietly pile up, turning into idle resources and runaway costs. Underprovisioning, on the other hand, can feel like efficiency, until users feel the slowdown, incidents spike, and SLA penalties kick in.

Neither approach is sustainable. Both stem from the same root cause: disconnected systems and manual decisions.

The more complex your environment, the less feasible it becomes to manage capacity by hand.

And this isn’t a people problem, it’s a workflow problem. The systems designed to help us manage complexity have become part of the problem, forcing IT to spend more time navigating tools than improving them.

The opportunity with intelligent workflows

When capacity management becomes intelligent, efficiency compounds. Intelligent workflows connect signals, decisions, and actions into a single continuous process, one that runs as fast as your infrastructure demands.

Here’s what that looks like in practice:

Monitoring tools detect a spike in resource utilization.

The workflow validates the alert, checks dependencies, and decides if scaling is warranted.

Provisioning actions execute automatically, adding or removing capacity as needed.

The workflow closes the loop, verifying performance and logging every step for audit and reporting.

This is orchestration in action. A continuous, intelligent flow that keeps systems responsive and resilient without manual oversight.
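To make the four steps above concrete, here is a minimal sketch of the loop in Python. It is an illustration under stated assumptions, not a reference implementation: the `Alert` and `ScalingWorkflow` names and the three callback hooks (`dependencies_healthy`, `scale`, `read_metric`) are hypothetical stand-ins for whatever monitoring and provisioning APIs you actually use.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class Alert:
    service: str
    metric: str
    value: float      # observed utilization, in percent
    threshold: float  # level above which scaling is considered


@dataclass
class ScalingWorkflow:
    # Hypothetical hooks; in practice these would wrap your own tooling.
    dependencies_healthy: Callable[[str], bool]
    scale: Callable[[str, int], None]
    read_metric: Callable[[str, str], float]
    audit_log: list = field(default_factory=list)

    def _log(self, step: str, **details) -> None:
        # Every step is recorded with a UTC timestamp for audit and reporting.
        entry = {"time": datetime.now(timezone.utc).isoformat(), "step": step}
        entry.update(details)
        self.audit_log.append(entry)

    def handle(self, alert: Alert) -> bool:
        # 1. Detect: the monitoring tool has raised an alert.
        self._log("detected", service=alert.service, value=alert.value)

        # 2. Validate: confirm the breach and check dependencies.
        breached = alert.value > alert.threshold
        if not breached or not self.dependencies_healthy(alert.service):
            self._log("skipped", service=alert.service)
            return False

        # 3. Act: add one unit of capacity.
        self.scale(alert.service, 1)
        self._log("scaled", service=alert.service, delta=1)

        # 4. Close the loop: re-read the metric and record the outcome.
        after = self.read_metric(alert.service, alert.metric)
        ok = after <= alert.threshold
        self._log("verified", service=alert.service, value=after, ok=ok)
        return ok
```

Because the hooks are plain callables, the same loop can be exercised against stubs before it is wired to real infrastructure, which is a low-risk way to test the decision logic on its own.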

How to start small

You don’t need to overhaul your stack to start. Intelligent workflows evolve from the groundwork you already have in place.

Trace your current path from alert to action. Document where time is lost. Is it in waiting for approvals, switching tools, or manually validating data?

Set clear, measurable thresholds. Define when action should happen. For example: “If CPU > 80% for 10 minutes, initiate scale-up.”

Connect what you can. Use simple scripts, APIs, or native integrations to link monitoring, provisioning, and ticketing.

Start small. Automate repetitive, low-risk tasks like scaling specific workloads or triggering cleanup reminders. Over time, these small automations can evolve into intelligent workflows, where you can combine deterministic reliability, human-in-the-loop oversight, and agentic adaptability to match the task’s complexity, as well as your comfort level.

Log everything. Build audit trails from day one. Visibility is your best insurance against both compliance risk and human error.

Each incremental improvement pays off: fewer manual touchpoints, faster adjustments, and less waste.
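A rule like “If CPU > 80% for 10 minutes, initiate scale-up” is easy to state and easy to get subtly wrong, because it has to ignore short spikes. The sketch below shows one way to express it in Python. The `SustainedThreshold` class is hypothetical, and it assumes samples arrive at regular intervals; a gap in the data would be treated as a continuous breach.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SustainedThreshold:
    limit: float = 80.0            # percent CPU
    window_seconds: float = 600.0  # 10 minutes
    breach_started: Optional[float] = None

    def observe(self, timestamp: float, value: float) -> bool:
        """Record one sample; return True once the value has stayed
        above the limit for at least the full window."""
        if value <= self.limit:
            # Any sample at or below the limit resets the clock.
            self.breach_started = None
            return False
        if self.breach_started is None:
            self.breach_started = timestamp
        return timestamp - self.breach_started >= self.window_seconds
```

Feeding it one sample per minute at 85% CPU returns `False` for the first ten samples and `True` on the eleventh, once a full ten minutes have elapsed since the breach began.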

Efficiency that compounds

As I mentioned before, when capacity management becomes intelligent, efficiency compounds. Systems adjust faster, costs stabilize, and teams reclaim the time they once spent firefighting:

Latency drops because systems scale before users feel it.

Cost stabilizes as idle resources are decommissioned automatically.

Teams gain back hours once lost to manual effort and firefighting.

The result isn’t just lower spend; it’s also control. Intelligent workflows turn capacity management from a recurring headache into a reliable, predictable process that runs smoothly.
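Decommissioning idle resources starts with deciding what counts as idle. As a rough sketch, the Python function below flags resources whose average utilization sits below a floor. The `find_idle` name, the 5% floor, and the 24-sample minimum are illustrative assumptions, not recommended values; tune them to your own workloads.

```python
from statistics import mean


def find_idle(
    utilization: dict[str, list[float]],
    floor: float = 5.0,
    min_samples: int = 24,
) -> list[str]:
    """Return the names of resources whose mean utilization (percent)
    is below `floor`, skipping any with too little history to judge."""
    return sorted(
        name
        for name, samples in utilization.items()
        if len(samples) >= min_samples and mean(samples) < floor
    )
```

The minimum-sample check keeps newly launched resources from being flagged before they have had a chance to take traffic. In a human-in-the-loop setup, the output would feed a cleanup reminder or an approval step rather than an immediate teardown.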

Example pre-built workflow

We have multiple pre-built workflows in our Library to help you with intelligent capacity management, including the story below. Import this story to your Tines tenant and adapt it to meet your unique needs.

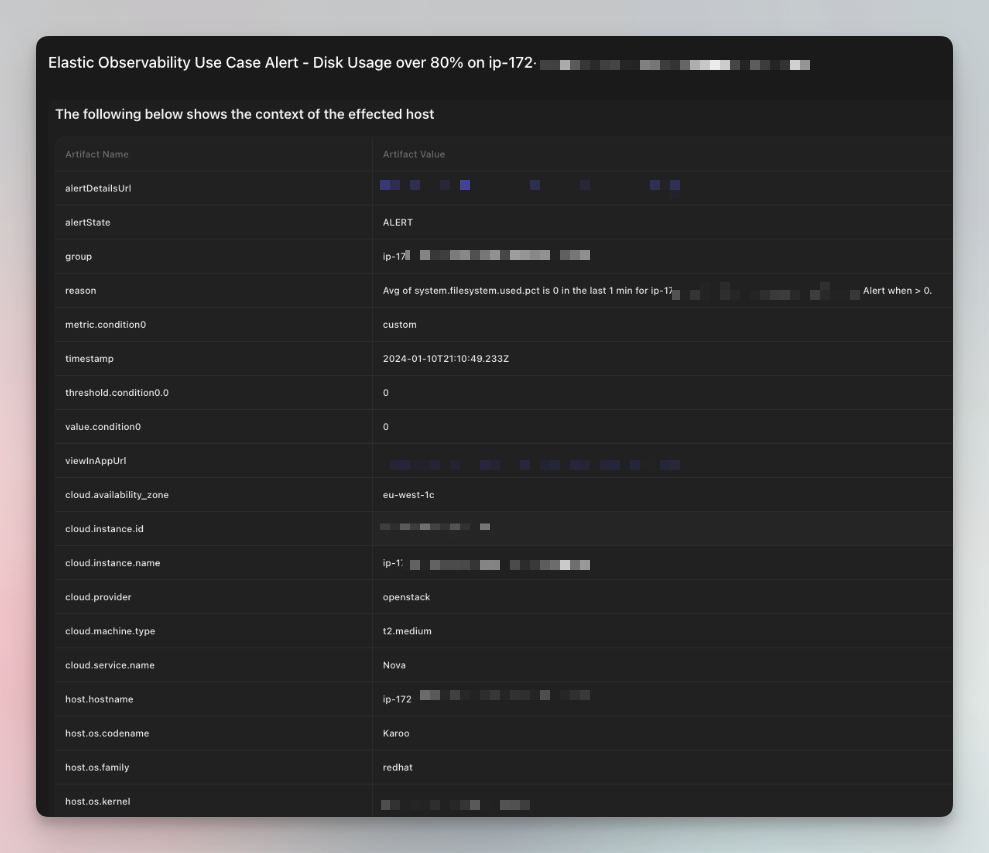

Identify and remediate high AWS EC2 Disk Usage with Elastic Observability and document with Tines cases

Receive Elastic Observability alerts on an AWS EC2 instance's high disk usage averaged over time. This automated process generates a Tines case and solicits input from the administrator regarding the desired size upgrade. Subsequently, the instance is dynamically adjusted to the specified size, ensuring optimal performance.