Data leaks and information disclosure caused by employees are issues security teams regularly contend with. Committing credentials to GitHub is one of the better-known ways this happens. Recently, sensitive data posted on public Trello boards has also made headlines. In this post, we explore how security teams themselves often unintentionally expose sensitive company information.

Introduction

Cyber security teams analyze URLs and files to determine if they represent a threat to their organization. This requirement might arise while investigating a suspicious email sent to an executive staff member, or while reviewing web traffic from an infected endpoint. File and URL investigations can be time-consuming if performed manually. As such, creative security engineers have developed a number of solutions to automate and streamline this process in a safe way. We call these tools “sandboxes”.

Many of the most popular sandboxes (see five common examples below) are free and publicly available to security teams and researchers. These sandboxes are incredibly useful resources, and all security teams should be aware of them. However, like any tool, when misused they may actually cause data leaks in your company.

Although the exact mechanics of each sandbox vary, broadly they operate something like this:

User (usually a security analyst) submits a suspicious file or URL to a sandbox.

Sandbox analyzes the behavior of the submission (by opening the file or visiting the URL) and provides the user with analysis results, allowing them to determine if the URL or file represents a threat.

Sandbox stores and makes publicly searchable the results of the analysis so other companies may inform and protect themselves.

How security teams can cause data leaks

The problem of leaked data arises when a user submits to a sandbox a legitimate URL or file that leads to, or contains, sensitive information. By design, sandboxes record this information and make it public. Additionally, because many public sandboxes provide APIs that allow programmatic submissions, the volume of sensitive information inadvertently “sandboxed” by security teams increases. For example, at Tines we regularly see security teams sandboxing every URL in every email that comes from an external source to an employee. This is fantastic from a threat detection perspective, but unless filtering and redaction occur before sandbox submission, it’s almost certain that sensitive content will also be sandboxed.

To understand how widespread this subtle form of data leakage is, I spent a little time searching sandboxes for sensitive content. It’s important to point out that the services hosting the exposed content (Dropbox, Google Docs, etc.) are not at fault here. What happens to the URLs and emails after they are correctly sent to their intended recipient is largely out of those services’ control. (The argument that some of this content should be behind additional authentication or authorization is outside the scope of this post.)

URLs containing email addresses

It’s not uncommon for URLs in emails to contain the recipient’s email address as a parameter. So, we started by looking at every URL that contained the string “email=”. Over a two-day period, we identified several hundred unique corporate email addresses.
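To make this pattern concrete, here is a minimal sketch of how a team might check its own URLs for embedded email addresses before submitting them anywhere. The function name `emails_in_url`, the simplified email pattern, and the example URL are assumptions made for illustration; this is not the search we ran against the sandboxes.

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Deliberately simple pattern (an assumption): something@something.tld
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def emails_in_url(url: str) -> list[str]:
    """Return query-parameter values in `url` that look like email addresses."""
    query = urlsplit(url).query
    # parse_qsl percent-decodes values, so "jane%40example.org" becomes
    # "jane@example.org" before the pattern is applied.
    return [
        value
        for _key, value in parse_qsl(query, keep_blank_values=True)
        if EMAIL_RE.match(value)
    ]


if __name__ == "__main__":
    # Hypothetical tracking link of the kind found in marketing emails.
    print(emails_in_url("https://news.example.com/track?id=7&email=jane.doe%40example.org"))
```

Checking every parameter value, rather than only a parameter literally named “email”, also catches addresses passed under names such as “user” or “recipient”.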


Password reset emails

Next, we searched for sandboxed URLs that contained strings which indicated the URL related to a password reset email. For example:

• “resettoken”

• “passwordreset”

• “reset_password”

• “new_password”

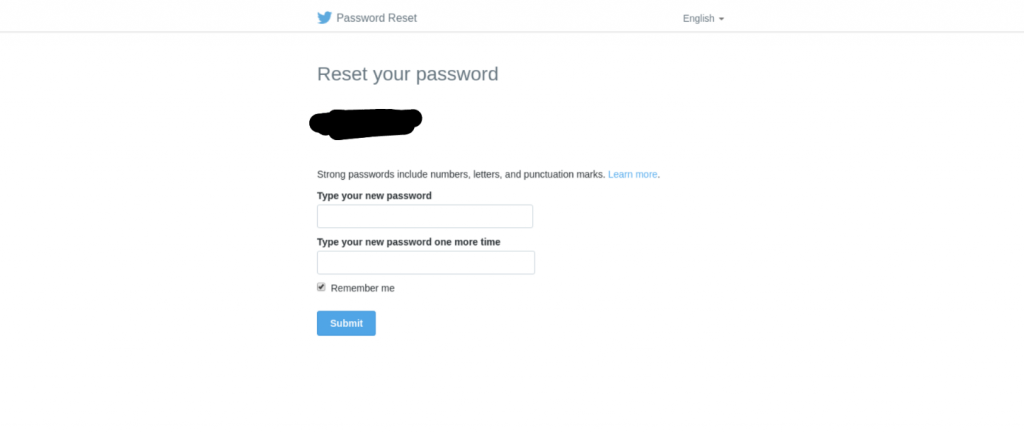

With a trivial amount of effort, we found around 50 still-valid password reset links, several of which were for well-known enterprise services. Additionally, we found password reset links for enterprise social media profiles. This is an interesting attack vector for opportunistic account takeovers (ATOs), but maybe a little contrived for targeted attacks.
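The same strings can be used defensively. The sketch below flags URLs that look like password reset links so they can be held back from a public sandbox. The marker list comes from the examples above; the function name `looks_like_password_reset` and the sample URLs are assumptions for illustration.

```python
# Substrings that suggest a URL belongs to a password reset flow.
RESET_MARKERS = ("resettoken", "passwordreset", "reset_password", "new_password")


def looks_like_password_reset(url: str) -> bool:
    """Return True if `url` contains any known password-reset marker."""
    lowered = url.lower()  # match regardless of case
    return any(marker in lowered for marker in RESET_MARKERS)


if __name__ == "__main__":
    # Hypothetical URLs, not taken from any real service.
    for candidate in (
        "https://app.example.com/users/reset_password?token=abc123",
        "https://example.com/blog/post-1",
    ):
        print(candidate, looks_like_password_reset(candidate))
```

A substring match like this will produce some false positives (for example, a help article about resetting passwords), which is usually an acceptable trade-off when the cost of a miss is a publicly searchable reset link.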

Screenshot showing compromised Twitter account

File Sharing Services

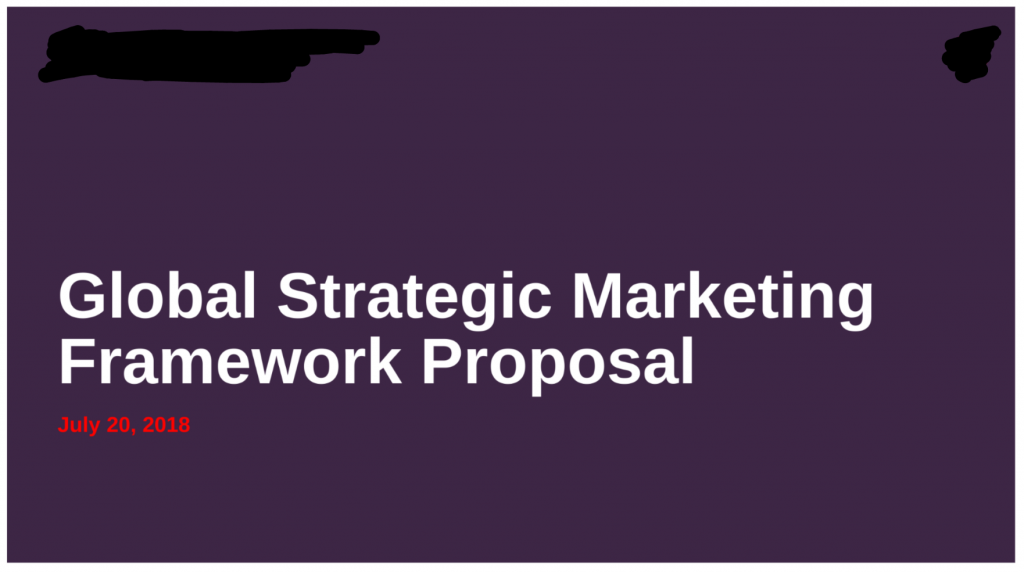

A familiar use case for file sharing services such as Dropbox, OneDrive, and WeTransfer involves emailing a shared link to a file. A search for strings used in these links returned thousands of files with over-generous sharing settings, i.e. “anyone with the link can access”. There were PPTs, docs, and several other files containing what appeared to be sensitive company information.

Screenshot showing leaked sensitive company content

Electronic Signature Services

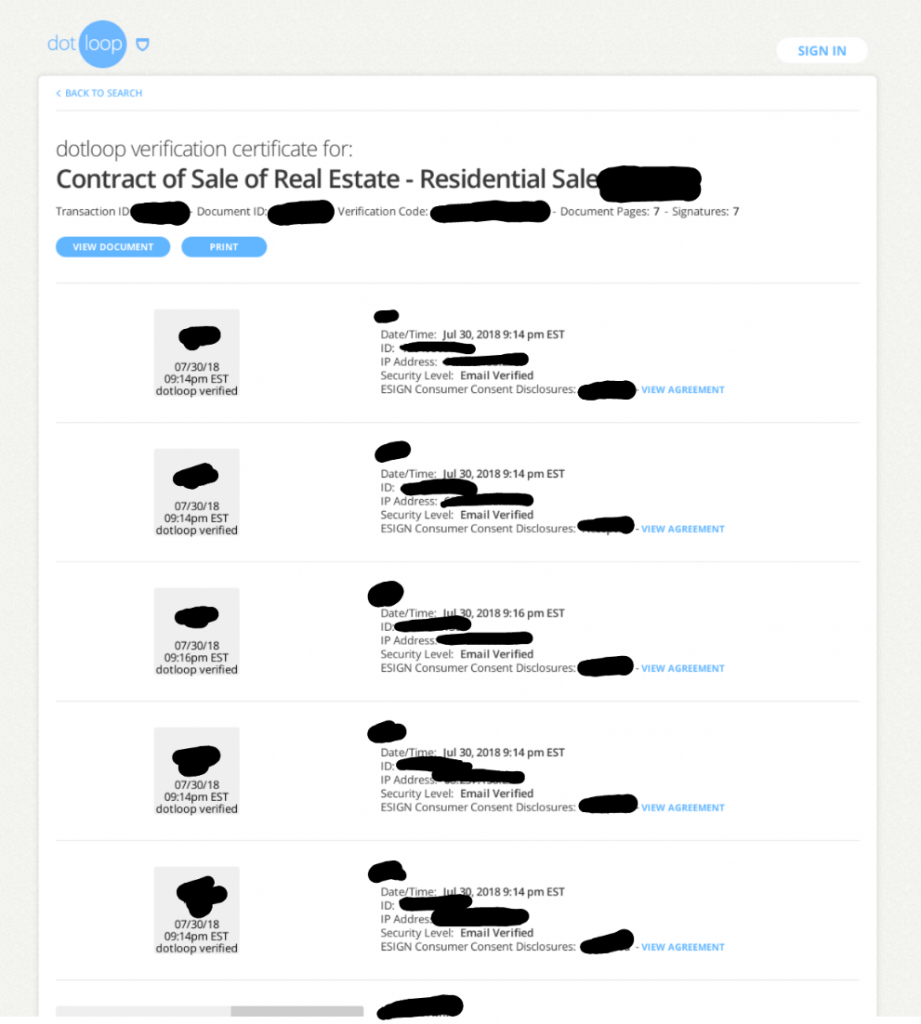

Services such as Adobe Sign, DocuSign, and DotLoop typically notify a user that they have a document awaiting signature. The notification email contains a link to a document, for example, a sales contract or NDA. I searched several sandboxes for signature links and found hundreds of documents (both signed and awaiting signature).

Screenshot of leaked company contract

Screenshot of leaked residential sale contract

Screenshot of leaked purchase agreement

Conclusion

The increased availability of free and powerful URL scanners is a good thing. Sandboxes provide an accessible way for security teams, who are often resource-constrained, to quickly collect important context around suspicious URLs and files.

In addition, submitting public crawls provides a forensic snapshot that allows security teams to investigate common attack patterns and has even been known to provide valuable info on nation-state attacks. The purpose of this post is not to scaremonger or drive security teams to commercial, proprietary sandboxes, but rather to shine a light on risks that security teams leveraging these valuable resources may not be aware of.

How to Avoid Automated Data Leaks

• Don’t sandbox URLs or files from senders/domains which you can confidently say will be legitimate.

• Some sandboxes provide a “private” feature to reduce the risk of data leaks. This completes the scan but does not store the results for public consumption.

• Before submitting to a sandbox, avoid data leaks by replacing sensitive information in URL parameters such as email addresses with benign placeholders.

• If you are a service provider who delivers sensitive content over email, consider subscribing to feeds of recent scans from public sandboxes. When sensitive content which you delivered is sandboxed, notify the original recipient. In addition, remove access to the leaked content.
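The first and third recommendations above can be combined into a small pre-submission step. The following is a rough sketch under stated assumptions: the trusted-domain list, the sensitive parameter names, the placeholder value, and the function name `prepare_for_sandbox` are all hypothetical and would need tuning for a real environment. It also treats the URL’s own host as the thing being trusted, which is only one way to interpret “senders/domains”.

```python
import re
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# All three values below are illustrative assumptions, not recommendations.
TRUSTED_DOMAINS = {"example-partner.com"}
SENSITIVE_PARAMS = {"email", "user", "recipient"}
PLACEHOLDER = "redacted@example.com"

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def prepare_for_sandbox(url: str) -> Optional[str]:
    """Return a redacted URL to submit, or None if the URL should be skipped."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()

    # Skip hosts we are confident are legitimate (exact match or subdomain).
    if any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS):
        return None

    # Replace sensitive parameter values with a benign placeholder.
    cleaned = [
        (key, PLACEHOLDER if key.lower() in SENSITIVE_PARAMS or EMAIL_RE.match(value) else value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
    ]
    return urlunsplit(parts._replace(query=urlencode(cleaned)))


if __name__ == "__main__":
    print(prepare_for_sandbox("https://news.example.net/click?id=7&email=jane%40corp.example"))
    print(prepare_for_sandbox("https://docs.example-partner.com/file?x=1"))
```

Redaction changes the URL, so a page that depends on the original parameter value may behave differently in the sandbox. That is the trade-off for keeping the real address out of a public index.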